Broken Flowers (variations)

Granted early access to DALL·E in April 2022, Ridler began experimenting with how this new mode of image generation might operate through the motif of tulips. The resulting works were produced during an early, unstable phase of the model’s development, when errors and bugs were still visible within the system. In particular, they capture a brief moment in which the watermark applied to all DALL·E-generated images was no longer recognised as a watermark by the model itself, and instead became something to be learned, repeated, and propagated across the images. The title of the work reflects this moment of technical breakdown while also referencing the term used to describe the striped patterns found in tulips. In doing so, it links the work back to the history of tulip mania, Ridler’s earlier projects with the flower, and the wider cycles of speculation and hype surrounding artificial intelligence.

Process and Research

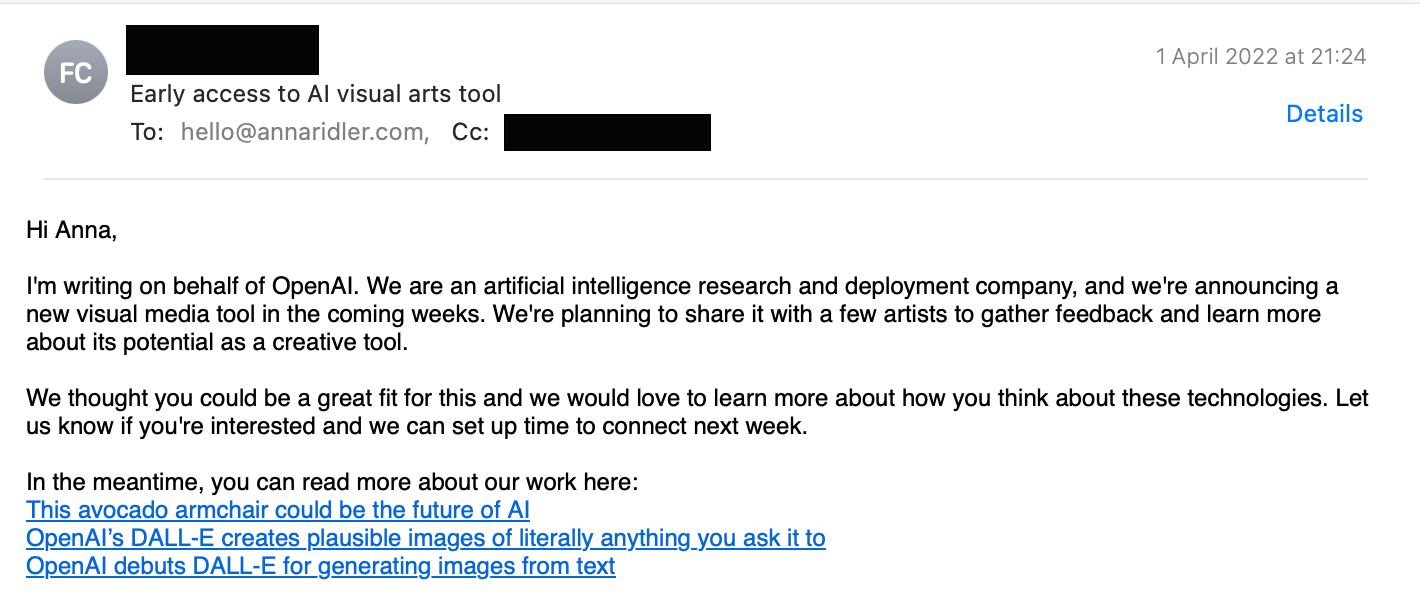

Conceptually, there is a significant difference between how GAN imagery is created and how text-to-image diffusion models produce outputs. GANs work through a competitive process between a generator, which produces images, and a discriminator, which evaluates them against the dataset, and so emphasise process, constraint and proximity to source material. With a GAN I was also able to construct every part of the system myself, from the training material through to the model, and to understand, as much as possible, how things came to be. Systems such as DALL·E, on the other hand, are large multimodal diffusion models that produce images through abstraction. They are trained on huge amounts of paired image and text data (millions and millions of pairs, impossible to create at the scale of a single artist) and then generate images by progressively denoising visual information, guided by natural language prompts. Language behaves differently here: rather than functioning primarily as a limited hook of categorisation (as it often does in GANs), it is central to how the models operate, translating linguistic descriptions into visual form by drawing on broad, generalised associations. This allows for much greater flexibility and combinatorial range, but at the same time it distances the system from any legible or traceable dataset or material context and masks the complexity of the systems behind it. Despite the early access, it took me a long time to work out how to work with the aesthetic and philosophical possibilities of this technology, and it is something that I am still thinking through.