Let Me Dream Again

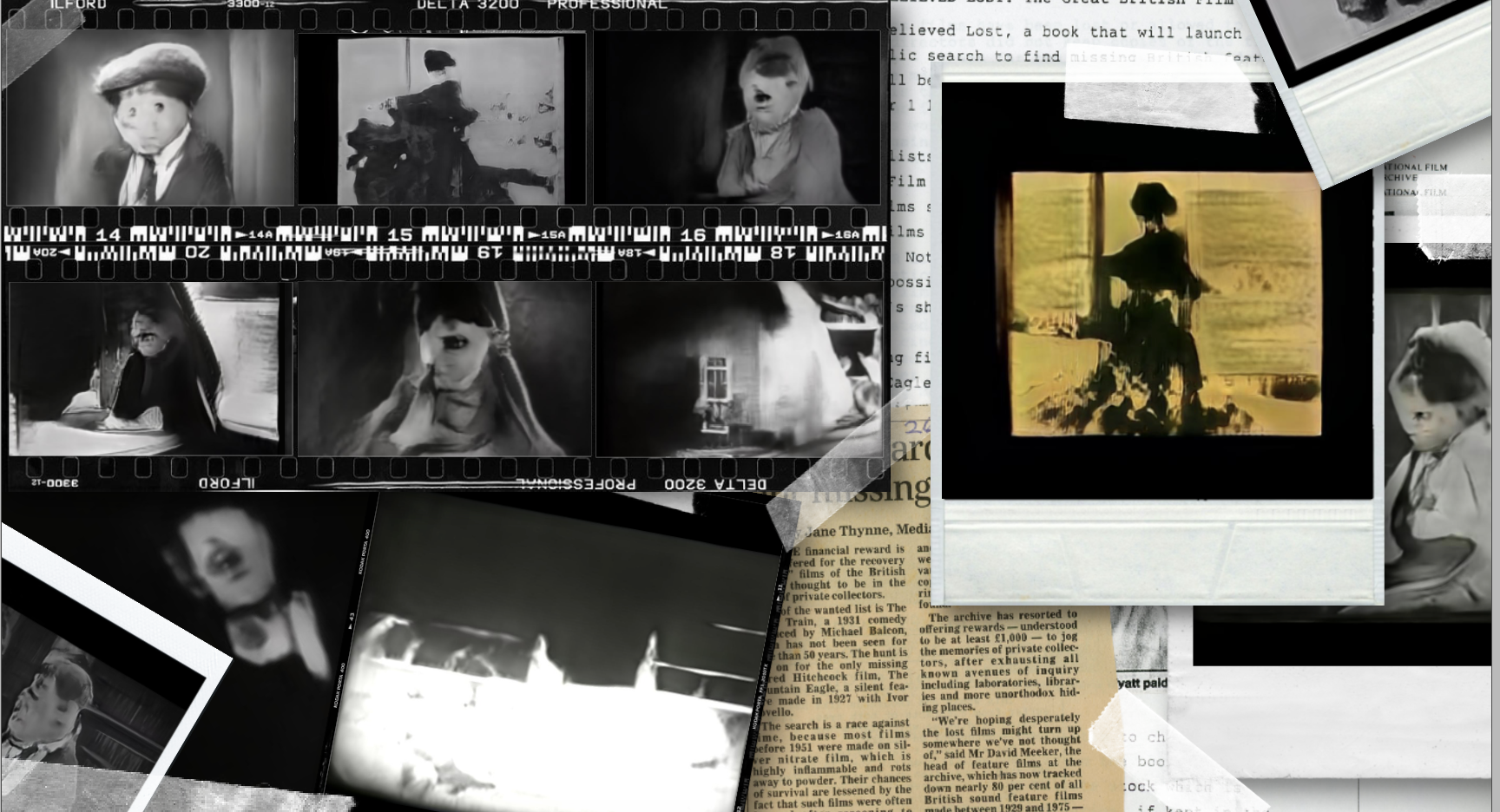

Let Me Dream Again is a series of experiments that use machine learning to try to recreate lost films from the surviving fragments of early Hollywood and Western European cinema that can be found on the internet. While my previous work has used machine learning as a disintegration loop, destroying what was there until no meaning was left, I wanted to see if it was possible to do the opposite: to remake something that has disappeared. Some of these experiments are on this page, including an endlessly evolving, algorithmically generated film which exists only on this website.

There are strong parallels between early cinema and imagery generated by machine learning. Early film-makers had to invent a new visual language, much as artists working with machine learning are doing today. Similarly, machine learning work often places heavy emphasis on the machine that created the visuals, rather than the visuals themselves, also an echo of early cinema. Both were considered niche technologies in their infancies, and both try to record and reflect the world as seen by those in control, at times exposing implicit bias or more critical views.

GANs are a way to experiment with this space that overlays past with present, real with dreamlike. GANs generate images which, when put together to create a film, move in a strange, unearthly way that defies the rules of logic about how objects and people should behave. However, these same characteristics make it very challenging to build a film with a clear narrative out of what was generated. The GIFs that are now playing came from an experiment in which a new film was made. It contains a shadow film of stills from the original cinema clips, which sits behind the GAN-generated film, moving in and out of the foreground.

The process blends the past and present, real and dreamlike, recutting and reconfiguring hundred-year-old footage to join together things that shouldn't be joined, yet at the same time creating a structure and a coherence.

Process and Research

Ideas about dreaming are embedded throughout the project. When we dream, our brain uses sensory data as the raw material from which to recreate a detailed and internally coherent world. In machine learning, the algorithms generating the film take in similar 'real' imagery from the dataset to build up their own picture of the world and what it means. Although, like dreams, this picture is internally coherent based on the original input, it is a warped and imperfect reflection of the real world, full of uncanny and weird moments. Because of this, perhaps, it has become common for the output of a GAN to be described as the algorithm 'dreaming' or 'hallucinating'.

As tempting as it is to anthropomorphize this process and make links between our own experience and what comes out of the machine, a GAN does not dream like we do. The actual mechanism is far more prosaic: repeated applications of the same simple operations that something like a video game engine uses. But linguistically a connection has been made, even by scientists.

The idea of dreaming, and of how to represent dreams, has always been part of the history of cinema. The title of the project, Let Me Dream Again, refers back to a Victorian film of the same name which is thought to be the first instance of a dreamscape in cinema and of a transition between dreams and reality. Many directors have used dreams and dreamlike elements as part of their work. Film theory uses the word 'oneiric' to talk about these sequences and how they collapse the gap, as one artist writes, between being 'awake and falling asleep'. It is also a word used by the scientific community to describe research into dreams, which looks at, amongst other things, how the brain works during dreaming and how this relates to memory formation (some hypothesize that dreams help consolidate memories).

Given how prominent neuroscience and concepts of memory have been in the development of deep learning, using dreams as a way of constructing meaning seems appropriate.

Project Credits

Supported by Google Artists + Machine Intelligence, Google Research, and Google Arts & Culture. Technical support from Holly Grimm and Parag Mital, graphic design by Seung Eun Baek, and sound by Ben Heim. The website was made by Eoghan O'Keeffe (epok.tech).

Dissemination

The website for this work is https://artsexperiments.withgoogle.com/let-me-dream-again/